Some time ago I wrote a blog post about a possible design for interconnecting multiple Azure regions using Network Virtual Appliances (NVAs) and the Azure Route Server (ARS), in which I used an overlay tunnel between the NVAs with VXLAN as the encapsulation protocol. I have received multiple questions as to whether it would be possible to do it without the tunnels. The answer is yes, but it's going to be quite a ride (sneak preview: I still prefer the VXLAN option). Come with me!

Before we start, I would like to thank my friend Jorge for his post on different topologies with Azure Route Server; what I am going to do here is mostly dive a bit deeper into details he already explains there. This is the topology I discussed in my previous post with the VXLAN overlay, where you can see the overlay tunnel in the middle, represented in purple:

Why do we need that tunnel? The key lies in the effective routes of the NVA NICs. For example, the NIC of the NVA in region 1 looks like this:

    ❯ az network nic show-effective-route-table --ids $hub1_nva_nic_id -o table
    Source                 State    Address Prefix    Next Hop Type          Next Hop IP
    ---------------------  -------  ----------------  ---------------------  -------------
    Default                Active   10.1.0.0/20       VnetLocal
    Default                Active   10.1.16.0/24      VNetPeering
    Default                Active   10.1.17.0/24      VNetPeering
    VirtualNetworkGateway  Active   10.1.0.0/16       VirtualNetworkGateway  10.1.1.4
    VirtualNetworkGateway  Active   10.2.0.0/16       VirtualNetworkGateway  10.1.1.4
    VirtualNetworkGateway  Active   192.168.1.0/24    VirtualNetworkGateway  10.2.146.35
    Default                Active   10.2.0.0/20       VNetGlobalPeering

Note the route for 10.2.0.0/16 (the other region), whose next hop is the NVA itself (10.1.1.4). Only the hub in region 2 (10.2.0.0/20) matches a more specific route, the global peering; everything else in region 2, such as its spokes, matches the /16. If the NVA in region 1 sends a packet to a spoke in region 2 over this NIC, that route will hand it right back to the NVA, creating a routing loop. You might be tempted to disable gateway route propagation for this NIC, but then the NVA wouldn't know how to reach on-premises either (the 192.168.1.0/24 route), since that setting affects all routes injected into the subnet, both by the Route Server and by any Virtual Network Gateway (VPN or ExpressRoute). The VXLAN tunnel trick hides the actual destination IP of the packet from the Azure SDN, which only sees the outer header addressed to the remote NVA, and hence makes routing possible (refer to this blog for more details).
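The loop is simply longest-prefix match at work. The following Python sketch is a minimal model, not Azure's implementation: it loads the effective routes from the table above and resolves two destinations. Both addresses are hypothetical: 10.2.16.4 stands for a VM in a region 2 spoke, and 10.2.1.4 for the NVA in region 2, which is what the Azure SDN would see as the outer destination of a VXLAN packet.

```python
import ipaddress

# Effective routes of the region 1 NVA NIC, copied from the table above:
# (address prefix, next hop type, next hop IP or None)
HUB1_NVA_NIC_ROUTES = [
    ("10.1.0.0/20", "VnetLocal", None),
    ("10.1.16.0/24", "VNetPeering", None),
    ("10.1.17.0/24", "VNetPeering", None),
    ("10.1.0.0/16", "VirtualNetworkGateway", "10.1.1.4"),
    ("10.2.0.0/16", "VirtualNetworkGateway", "10.1.1.4"),
    ("192.168.1.0/24", "VirtualNetworkGateway", "10.2.146.35"),
    ("10.2.0.0/20", "VNetGlobalPeering", None),
]
NVA_IP = "10.1.1.4"  # IP of the NVA's own NIC


def lookup(routes, destination):
    """Longest-prefix match: return the most specific matching route, or None."""
    dst = ipaddress.ip_address(destination)
    matches = [r for r in routes if dst in ipaddress.ip_network(r[0])]
    if not matches:
        return None
    return max(matches, key=lambda r: ipaddress.ip_network(r[0]).prefixlen)


def loops_back(routes, destination, own_ip=NVA_IP):
    """True if the NIC would hand a packet for `destination` back to the NVA itself."""
    route = lookup(routes, destination)
    return route is not None and route[2] == own_ip


SPOKE22_VM = "10.2.16.4"  # hypothetical VM in a region 2 spoke
HUB2_NVA = "10.2.1.4"     # hypothetical NVA in the region 2 hub

print(lookup(HUB1_NVA_NIC_ROUTES, SPOKE22_VM))  # ('10.2.0.0/16', 'VirtualNetworkGateway', '10.1.1.4')
print(lookup(HUB1_NVA_NIC_ROUTES, HUB2_NVA))    # ('10.2.0.0/20', 'VNetGlobalPeering', None)
```

A packet for the spoke resolves to the NVA itself, so it comes straight back; the remote NVA's own address falls into the more specific /20 and leaves over the global peering, which is why tunnelling to that address works.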

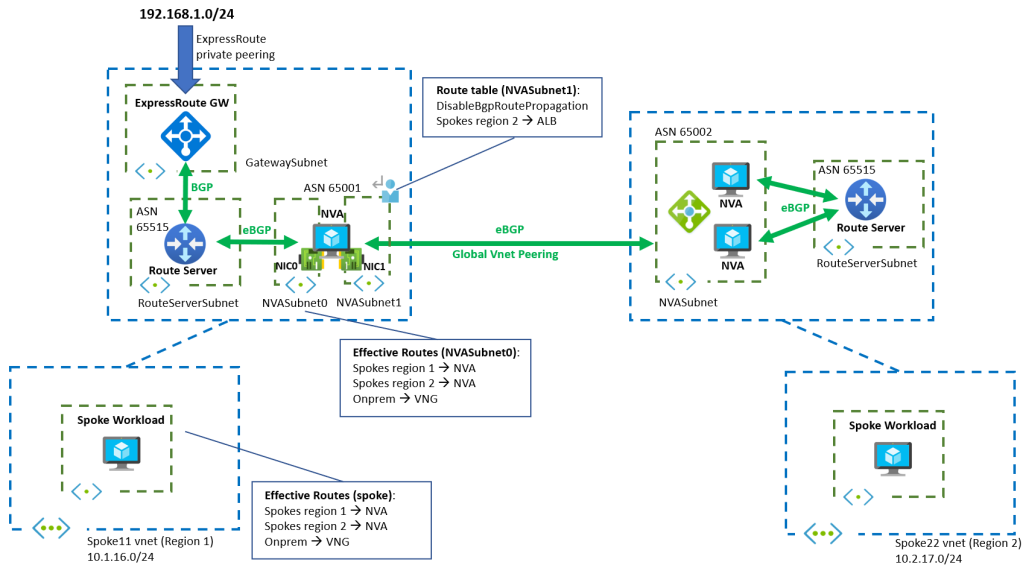

How can we do this without VXLAN tunnels? We will need at least two NICs in the NVA, each in its own subnet, since gateway route propagation is configured on the route table associated with a subnet. For one of them we will enable gateway route propagation, so that the NVA still knows how to reach the on-premises network; for the other we will disable it, so that no routing loop is created when packets from region 1 to region 2 leave through it. Something like this:
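To make the effect of that setting concrete, here is a simplified Python model of the two NICs, reusing the prefixes from the region 1 route table. It assumes a NIC's effective routes are just the system routes plus, only when gateway route propagation is enabled, the routes injected by ARS and the gateway; real tables contain more entries (for example the default route to the Internet), and the function and variable names are illustrative.

```python
from typing import NamedTuple, Optional


class Route(NamedTuple):
    prefix: str
    source: str                        # "Default" (system) or "VirtualNetworkGateway"
    next_hop_type: str
    next_hop_ip: Optional[str] = None


# System routes of the region 1 hub VNet, present on every NIC in it.
SYSTEM_ROUTES = [
    Route("10.1.0.0/20", "Default", "VnetLocal"),
    Route("10.1.16.0/24", "Default", "VNetPeering"),
    Route("10.1.17.0/24", "Default", "VNetPeering"),
    Route("10.2.0.0/20", "Default", "VNetGlobalPeering"),
]

# Routes injected by the Azure Route Server (advertised by the NVA) and the gateway.
GATEWAY_ROUTES = [
    Route("10.1.0.0/16", "VirtualNetworkGateway", "VirtualNetworkGateway", "10.1.1.4"),
    Route("10.2.0.0/16", "VirtualNetworkGateway", "VirtualNetworkGateway", "10.1.1.4"),
    Route("192.168.1.0/24", "VirtualNetworkGateway", "VirtualNetworkGateway", "10.2.146.35"),
]


def effective_routes(propagate_gateway_routes):
    """Simplified model of a NIC's effective routes, driven by its subnet's route table setting."""
    routes = list(SYSTEM_ROUTES)
    if propagate_gateway_routes:
        routes += GATEWAY_ROUTES
    return routes


nic0 = effective_routes(propagate_gateway_routes=True)   # BGP side: learns on-premises via ARS
nic1 = effective_routes(propagate_gateway_routes=False)  # region 2 side: no ARS routes

NVA_IP = "10.1.1.4"
print([r.prefix for r in nic0 if r.next_hop_ip == NVA_IP])  # ['10.1.0.0/16', '10.2.0.0/16']
print([r.prefix for r in nic1 if r.next_hop_ip == NVA_IP])  # []
```

NIC0 keeps the on-premises route (and the self-pointing routes, which is fine because the NVA will not send region 2 traffic through it), while NIC1 has nothing pointing back at the NVA.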

How does traffic flow from a spoke in region 1 (spoke11) to a spoke in region 2 (spoke22)? Let's follow the packets. First, the VM in spoke11 sends them to its only NIC, which has the routes injected by the Azure Route Server:

    ❯ az network nic show-effective-route-table --ids $spoke11_vm_nic_id -o table
    Source                 State    Address Prefix    Next Hop Type          Next Hop IP
    ---------------------  -------  ----------------  ---------------------  -------------
    Default                Active   10.1.16.0/24      VnetLocal
    Default                Active   10.1.0.0/20       VNetPeering
    VirtualNetworkGateway  Active   10.1.0.0/16       VirtualNetworkGateway  10.1.1.4
    VirtualNetworkGateway  Active   10.2.0.0/16       VirtualNetworkGateway  10.1.1.4
    VirtualNetworkGateway  Active   192.168.1.0/24    VirtualNetworkGateway  10.2.146.35

Packets to region 2 will match the route for 10.2.0.0/16, which points to the NVA in region 1 (because the NVA advertised it to the Azure Route Server). Now comes the good part: the NVA talks to the Azure Route Server over one of its NICs (NIC0), and to the NVA in region 2 over the other (NIC1). Internal routing in the NVA makes sure that the NVA in region 2 is reached over NIC1 (which maps to eth1 in the OS):

    jose@hub1nva:~$ ip route
    default via 10.1.1.1 dev eth0 proto dhcp src 10.1.1.4 metric 100
    10.1.0.0/20 via 10.1.1.1 dev eth0 proto bird
    10.1.1.0/24 dev eth0 proto kernel scope link src 10.1.1.4
    10.1.11.0/24 dev eth1 proto kernel scope link src 10.1.11.4
    10.1.11.4 via 10.1.11.1 dev eth1 proto bird
    10.1.16.0/24 via 10.1.1.1 dev eth0 proto bird
    10.1.17.0/24 via 10.1.1.1 dev eth0 proto bird
    10.2.0.0/20 via 10.1.11.1 dev eth1 proto bird
    10.2.0.0/16 via 10.1.11.1 dev eth1 proto bird
    10.2.0.4 via 10.1.11.1 dev eth1 proto bird
    10.2.0.5 via 10.1.11.1 dev eth1 proto bird
    10.2.16.0/24 via 10.1.11.1 dev eth1 proto bird
    10.2.17.0/24 via 10.1.11.1 dev eth1 proto bird
    168.63.129.16 via 10.1.1.1 dev eth0 proto dhcp src 10.1.1.4 metric 100
    169.254.169.254 via 10.1.1.1 dev eth0 proto dhcp src 10.1.1.4 metric 100
    192.168.1.0/24 via 10.1.1.1 dev eth0 proto bird
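The forwarding decision inside the NVA is ordinary longest-prefix match on the Linux routing table. This Python sketch parses a subset of the `ip route` output above and picks the egress interface for a destination in a region 2 spoke (10.2.16.4) and one on-premises (192.168.1.10); both addresses are hypothetical. It ignores metrics and policy routing, which the real kernel also takes into account.

```python
import ipaddress

# A subset of the `ip route` output of hub1nva shown above.
IP_ROUTE_OUTPUT = """\
default via 10.1.1.1 dev eth0 proto dhcp src 10.1.1.4 metric 100
10.1.0.0/20 via 10.1.1.1 dev eth0 proto bird
10.1.1.0/24 dev eth0 proto kernel scope link src 10.1.1.4
10.1.11.0/24 dev eth1 proto kernel scope link src 10.1.11.4
10.1.16.0/24 via 10.1.1.1 dev eth0 proto bird
10.2.0.0/20 via 10.1.11.1 dev eth1 proto bird
10.2.0.0/16 via 10.1.11.1 dev eth1 proto bird
10.2.0.4 via 10.1.11.1 dev eth1 proto bird
10.2.16.0/24 via 10.1.11.1 dev eth1 proto bird
192.168.1.0/24 via 10.1.1.1 dev eth0 proto bird
"""


def parse_routes(text):
    """Turn `ip route` lines into (network, gateway, device) tuples."""
    routes = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        dest = "0.0.0.0/0" if fields[0] == "default" else fields[0]
        network = ipaddress.ip_network(dest)  # bare hosts such as 10.2.0.4 become /32
        gateway = fields[fields.index("via") + 1] if "via" in fields else None
        device = fields[fields.index("dev") + 1]
        routes.append((network, gateway, device))
    return routes


def egress(routes, destination):
    """Longest-prefix match; the default route guarantees at least one candidate."""
    dst = ipaddress.ip_address(destination)
    candidates = [r for r in routes if dst in r[0]]
    _, gateway, device = max(candidates, key=lambda r: r[0].prefixlen)
    return device, gateway


ROUTES = parse_routes(IP_ROUTE_OUTPUT)
print(egress(ROUTES, "10.2.16.4"))     # ('eth1', '10.1.11.1')
print(egress(ROUTES, "192.168.1.10"))  # ('eth0', '10.1.1.1')
```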

As a consequence, packets forwarded by the NVA to region 2 will hit NIC1 (and not NIC0). NIC1’s subnet has a route table with gateway route propagation disabled, so it will not learn the routes injected by Azure Route Server, and hence there will be no routing loop. Great, isn’t it?

Wait a minute: if NIC1 doesn't learn the routes from ARS, it doesn't know how to reach the spokes in region 2 either (they are not directly peered with the hub in region 1)! Ah, but that is easy: we can create a User Defined Route (UDR) pointing to the NVAs in region 2. But to which NVA? We want high availability, don't we? With BGP, ECMP would do that magic, but now we are back to UDRs, so we need the Azure Load Balancer shown in the picture as the next hop of our UDR.
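The resulting table on NIC1 can be sketched in Python as the system routes plus one UDR with next hop type `VirtualAppliance`. The load balancer frontend IP (10.2.1.100) is a hypothetical value, and the selection logic is a simplified model: longest prefix first and, for identical prefixes, the precedence Azure documents (user-defined, then BGP, then system routes).

```python
import ipaddress
from typing import NamedTuple, Optional


class Route(NamedTuple):
    prefix: str
    source: str                        # "User", "VirtualNetworkGateway" or "Default"
    next_hop_type: str
    next_hop_ip: Optional[str] = None


# Tie-break for identical prefixes: UDR beats BGP-learned beats system routes.
SOURCE_PRIORITY = {"User": 0, "VirtualNetworkGateway": 1, "Default": 2}

# Hypothetical frontend IP of the internal load balancer in front of the region 2 NVAs.
REGION2_LB_FRONTEND = "10.2.1.100"

# NIC1 (gateway route propagation disabled): system routes plus one UDR.
NIC1_ROUTES = [
    Route("10.1.0.0/20", "Default", "VnetLocal"),
    Route("10.1.16.0/24", "Default", "VNetPeering"),
    Route("10.1.17.0/24", "Default", "VNetPeering"),
    Route("10.2.0.0/20", "Default", "VNetGlobalPeering"),
    Route("10.2.0.0/16", "User", "VirtualAppliance", REGION2_LB_FRONTEND),
]


def select_route(routes, destination):
    """Longest prefix wins; for equal prefixes, the route source priority decides."""
    dst = ipaddress.ip_address(destination)
    matches = [r for r in routes if dst in ipaddress.ip_network(r.prefix)]
    if not matches:
        return None
    return min(
        matches,
        key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen, SOURCE_PRIORITY[r.source]),
    )


route = select_route(NIC1_ROUTES, "10.2.16.4")  # hypothetical VM in spoke22
print(route.next_hop_type, route.next_hop_ip)   # VirtualAppliance 10.2.1.100
```

Traffic to the region 2 hub itself still uses the more specific global peering route, and nothing on NIC1 points back to the NVA, so the loop is gone.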

Now, you will ask whether the return traffic is symmetric: if both NVAs in region 2 are active, will the return traffic from spoke22 go through the same NVA in region 2 as the traffic from spoke11? The answer is that the two directions use different mechanisms to select the NVA, so traffic can indeed be asymmetric. For packets from spoke11 to spoke22, the Azure Load Balancer in region 2 decides which NVA in region 2 handles the traffic. For the return traffic from spoke22 to spoke11, ECMP on the NIC of the VM in spoke22 decides which NVA receives the packets. The two decisions are independent of each other.
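A toy simulation shows why two independent choices break symmetry. In this Python sketch both the load balancer and ECMP are modelled as salted SHA-256 hashes of the 5-tuple; that is an assumption, since the exact hashes Azure uses are not what matters here, and the NVA names and addresses are hypothetical. Any two unrelated hash functions give the same qualitative result.

```python
import hashlib
from typing import NamedTuple

# Hypothetical names for the two active NVAs in region 2.
NVAS = ["nva2-a", "nva2-b"]


class Flow(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str = "tcp"

    def reply(self):
        """The return direction of the same connection."""
        return Flow(self.dst_ip, self.dst_port, self.src_ip, self.src_port, self.protocol)


def hashed_pick(flow, salt):
    """Toy stand-in for a 5-tuple hash; the salt models two unrelated algorithms."""
    key = "|".join([salt, *map(str, flow)]).encode()
    return NVAS[hashlib.sha256(key).digest()[0] % len(NVAS)]


def load_balancer_pick(flow):  # spoke11 -> spoke22, via the UDR to the load balancer
    return hashed_pick(flow, "load-balancer")


def ecmp_pick(flow):           # spoke22 -> spoke11, via the ARS routes (ECMP)
    return hashed_pick(flow, "ecmp")


# 1,000 hypothetical connections from a VM in spoke11 to a web server in spoke22.
flows = [Flow("10.1.16.4", port, "10.2.16.4", 443) for port in range(40000, 41000)]
asymmetric = [f for f in flows if load_balancer_pick(f) != ecmp_pick(f.reply())]
print(f"{len(asymmetric)} of {len(flows)} connections return through a different NVA")
```

With two NVAs and unrelated hashes you should expect roughly half of the connections to come back through the other NVA, which is exactly what breaks stateful inspection.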

The usual trick to guarantee traffic symmetry is configuring Source NAT in the NVAs in region 2, so that the return traffic is addressed to the NVA that forwarded the original packets. Otherwise, since traffic symmetry is not guaranteed, you should either avoid stateful packet processing in the NVAs, or not use the Azure Route Server to route this traffic and instead configure UDRs in spoke22 that point to the same Azure Load Balancer, so that traffic symmetry is re-established.
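Why Source NAT fixes this can be shown with another toy model in Python. The assumptions are that each region 2 NVA translates the source to its own IP (hypothetical addresses 10.2.1.4 and 10.2.1.5 in the region 2 hub), and that spoke22 reaches those IPs over its peering with the hub, a more specific route than the ECMP routes from ARS. To make the point independent of ECMP, the sketch assumes the worst case, in which ECMP would always pick the other NVA.

```python
import zlib
from typing import NamedTuple

# Hypothetical NVAs in region 2 and the IPs they use as the SNAT source.
NVA_IPS = {"nva2-a": "10.2.1.4", "nva2-b": "10.2.1.5"}


class Flow(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def load_balancer_pick(flow):
    """Toy stand-in for the load balancer's hash (not Azure's real algorithm)."""
    names = sorted(NVA_IPS)
    return names[zlib.crc32(repr(flow).encode()) % len(names)]


def forward_through_region2(flow, snat):
    """Return the NVA chosen on the way in and the packet as it reaches spoke22."""
    nva = load_balancer_pick(flow)
    if snat:
        # Source NAT: rewrite the source IP to the NVA's own address (port kept for simplicity).
        flow = flow._replace(src_ip=NVA_IPS[nva])
    return nva, flow


def return_path_nva(reply_dst_ip, ecmp_choice):
    """A reply addressed to an NVA's own IP goes straight to it; otherwise ECMP decides."""
    for name, ip in NVA_IPS.items():
        if ip == reply_dst_ip:
            return name
    return ecmp_choice


def is_symmetric(flow, snat):
    nva_in, delivered = forward_through_region2(flow, snat)
    # Worst case: ECMP in spoke22 would pick the *other* NVA for the reply.
    other = next(name for name in NVA_IPS if name != nva_in)
    # spoke22 replies to whatever source IP it saw.
    return return_path_nva(delivered.src_ip, ecmp_choice=other) == nva_in


flows = [Flow("10.1.16.4", port, "10.2.16.4", 443) for port in range(40000, 41000)]
print(sum(is_symmetric(f, snat=True) for f in flows), "of", len(flows), "replies symmetric with SNAT")
# 1000 of 1000 replies symmetric with SNAT
print(sum(is_symmetric(f, snat=False) for f in flows), "of", len(flows), "replies symmetric without SNAT")
# 0 of 1000 replies symmetric without SNAT
```

The price of this approach is that spoke22 no longer sees the original client IP, which matters if the application relies on it.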

For the return traffic from the NVAs in region 2, the same constructs explained for region 1 would be required, but I will leave that to the reader's imagination.

Hopefully the complexity of this scenario explains why I like running an overlay across the NVAs in Azure. The overlay removes the dependency between routing at the NVA level (BGP) and at the NIC level (Azure SDN), leaving it up to the NVAs to decide where to route traffic, regardless of the effective route tables of their NICs. For example, you don't need any static routes: all routing is dynamic. Granted, creating those VXLAN tunnels is not going to be a piece of cake, so I guess I am trading one complexity for another. Which one do you prefer?

Thanks for reading!
