Some large Azure customers have been asking me questions around a common theme. These refrains might sound familiar to you: “I have run out of IPv4 addresses”, “My network team can only allocate so many IPs for Azure”, “How can I reuse IP space in Azure?”

If they do, I have a hack for you. Let me start by saying that what follows is by no means a best practice, but this little trick might come in handy in certain situations. In essence, this is what we are trying to achieve:

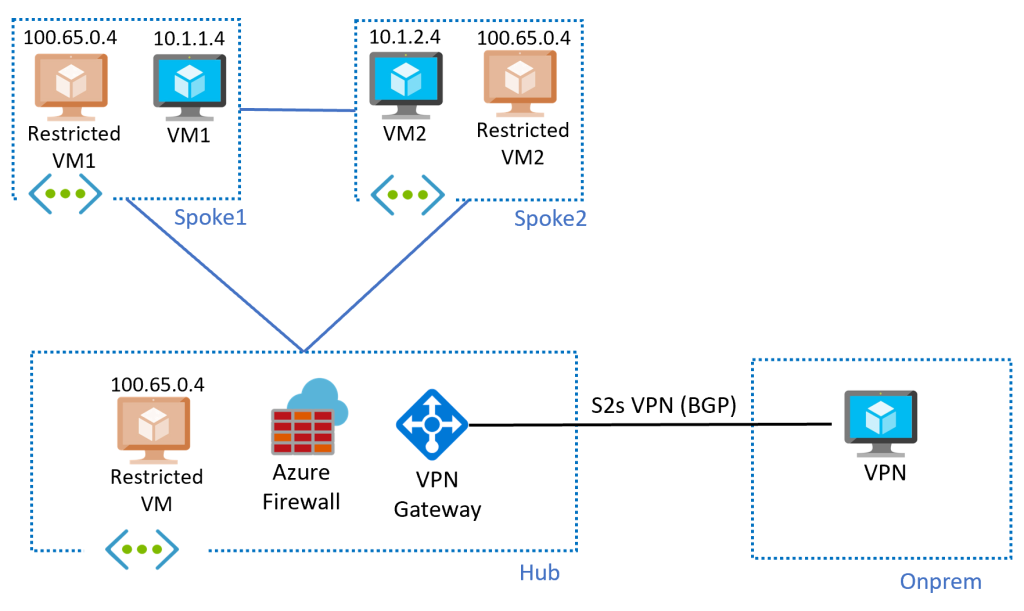

Let me explain:

- The architecture follows a hub-and-spoke pattern, with connectivity to on-premises from the hub. In this example I use a VPN with BGP, but the design can be extended to ExpressRoute

- In each VNet I have some VMs with overlapping IP addresses (the “restricted” VMs 1 and 2 have the same IP address 100.65.0.4) and some other VMs with non-overlapping IP ranges

- VM1 and VM2 can communicate with each other, with the Internet, and with on-premises networks

- The “restricted” VMs in the spokes can only communicate with other systems in their own VNet, not outside of it

- The “restricted” VM in the hub can communicate with the Internet and with on-premises, but not with the spokes

How would you do this? Normally you cannot peer two VNets with overlapping address ranges. Even if the hub VNet didn’t have the 100.64.0.0/10 prefix defined, it could only be peered with one VNet containing that prefix, not with two (spokes 1 and 2). So what can we do?
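The peering constraint can be illustrated with Python’s standard ipaddress module. This is a minimal sketch of the rule as described in this post, not Azure’s actual validation code: it assumes a classic peering is rejected when the remote address space overlaps the local VNet, or overlaps a VNet the local one is already peered with. The hub address space (192.168.0.0/16 plus 100.64.0.0/10) is an assumption based on the prefixes the VPN gateway advertises later in this post; the function names are hypothetical.

```python
from ipaddress import ip_network

# Lab address spaces (hub values are an assumption inferred from the gateway routes).
HUB = [ip_network("192.168.0.0/16"), ip_network("100.64.0.0/10")]
SPOKE1 = [ip_network("10.1.1.0/24"), ip_network("100.64.0.0/10")]
SPOKE2 = [ip_network("10.1.2.0/24"), ip_network("100.64.0.0/10")]


def overlaps(a, b):
    """True if any prefix in address space a overlaps any prefix in b."""
    return any(x.overlaps(y) for x in a for y in b)


def can_classic_peer(local, already_peered, remote):
    """Simplified model of the classic VNet peering rule: the remote address
    space must not overlap the local VNet or any VNet it is already peered to."""
    if overlaps(local, remote):
        return False
    return not any(overlaps(peer, remote) for peer in already_peered)


# Hub with 100.64.0.0/10 defined: it cannot peer with a spoke that also has it.
print(can_classic_peer(HUB, [], SPOKE1))              # False
# Hub without 100.64.0.0/10: the first spoke is fine...
hub_small = [ip_network("192.168.0.0/16")]
print(can_classic_peer(hub_small, [], SPOKE1))        # True
# ...but the second spoke overlaps the first one.
print(can_classic_peer(hub_small, [SPOKE1], SPOKE2))  # False
```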

Does this work?

Let’s have a look at the VMs we have:

❯ az vm list-ip-addresses -g $rg -o table
VirtualMachine    PublicIPAddresses    PrivateIPAddresses
----------------  -------------------  --------------------
clientvm          51.124.223.105       192.168.10.4
dnsserver         20.234.191.116       192.168.53.4
hubprivatevm      108.143.7.75         100.65.0.4
mynva             23.97.190.223        172.16.0.4
spoke1clientvm    20.160.110.13        10.1.1.4
spoke1privatevm   20.101.149.117       100.65.0.4
spoke2clientvm    51.136.19.53         10.1.2.4
spoke2privatevm   20.101.145.21        100.65.0.4

As you can see, there are three VMs with the same IP address, 100.65.0.4. The ones in the spokes have very limited connectivity, only to resources inside their own VNet. For example, the “private” VM in spoke1 can reach the other VM in the same VNet (10.1.1.4), but literally nothing else:

jose@spoke1privatevm:~$ ping -c 1 10.1.1.4
PING 10.1.1.4 (10.1.1.4) 56(84) bytes of data.
64 bytes from 10.1.1.4: icmp_seq=1 ttl=64 time=1.44 ms
--- 10.1.1.4 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 1.445/1.445/1.445/0.000 ms
jose@spoke1privatevm:~$ ping -c 1 10.1.2.4
PING 10.1.2.4 (10.1.2.4) 56(84) bytes of data.
--- 10.1.2.4 ping statistics ---
1 packets transmitted, 0 received, 100% packet loss, time 0ms
jose@spoke1privatevm:~$ ping -c 1 172.16.0.4
PING 172.16.0.4 (172.16.0.4) 56(84) bytes of data.
--- 172.16.0.4 ping statistics ---
1 packets transmitted, 0 received, 100% packet loss, time 0ms
jose@spoke1privatevm:~$ curl ifconfig.co
curl: (7) Failed to connect to ifconfig.co port 80: Connection timed out

And yet, the other VM in the same VNet, which has a non-overlapping IP address, can reach the whole environment, including the Internet and on-premises:

jose@spoke1clientvm:~$ ping -c 1 100.65.0.4
PING 100.65.0.4 (100.65.0.4) 56(84) bytes of data.
64 bytes from 100.65.0.4: icmp_seq=1 ttl=64 time=0.893 ms
--- 100.65.0.4 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 0.893/0.893/0.893/0.000 ms
jose@spoke1clientvm:~$ ping -c 1 10.1.2.4
PING 10.1.2.4 (10.1.2.4) 56(84) bytes of data.
64 bytes from 10.1.2.4: icmp_seq=1 ttl=64 time=3.49 ms
--- 10.1.2.4 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 3.491/3.491/3.491/0.000 ms
jose@spoke1clientvm:~$ ping -c 1 172.16.0.4
PING 172.16.0.4 (172.16.0.4) 56(84) bytes of data.
64 bytes from 172.16.0.4: icmp_seq=1 ttl=63 time=6.78 ms
--- 172.16.0.4 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 6.781/6.781/6.781/0.000 ms
jose@spoke1clientvm:~$ curl ifconfig.co
20.160.129.121

But how does this work exactly?

Meet Azure Virtual Network Manager

Here we use a neat little trick of AVNM: it can create a special type of VNet peering that, unlike traditional VNet peerings, supports overlapping IP addresses. The obvious caveat is that communication between the overlapping ranges will not work; the rest of the IP space, however, works just fine.
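One way to reason about why the restricted spoke VMs cannot reach even non-overlapping destinations is the return path. This is my interpretation, sketched as a simplified model rather than a description of Azure internals: each VNet delivers a destination locally if it falls into one of its own prefixes, and otherwise uses a remote prefix only if that prefix does not overlap any local one. A flow works only if both the request and the reply land in the right VNet. The hub address space and the helper names are assumptions; the model ignores the firewall and on-premises paths.

```python
from ipaddress import ip_address, ip_network

# Lab address spaces (hub values are an assumption inferred from the gateway routes).
VNETS = {
    "hub": [ip_network("192.168.0.0/16"), ip_network("100.64.0.0/10")],
    "spoke1": [ip_network("10.1.1.0/24"), ip_network("100.64.0.0/10")],
    "spoke2": [ip_network("10.1.2.0/24"), ip_network("100.64.0.0/10")],
}


def next_vnet(from_vnet, dst):
    """Return the VNet that a packet sent from `from_vnet` to `dst` is delivered to,
    or None. Local prefixes win; a remote prefix is only usable if it does not
    overlap any local prefix (simplified model of the AVNM mesh peerings)."""
    dst = ip_address(dst)
    local = VNETS[from_vnet]
    if any(dst in p for p in local):
        return from_vnet
    for name, prefixes in VNETS.items():
        if name == from_vnet:
            continue
        for p in prefixes:
            if dst in p and not any(p.overlaps(loc) for loc in local):
                return name
    return None


def round_trip(src_vnet, src_ip, dst_vnet, dst_ip):
    """A flow works only if the request reaches dst_vnet and the reply reaches src_vnet."""
    return (next_vnet(src_vnet, dst_ip) == dst_vnet
            and next_vnet(dst_vnet, src_ip) == src_vnet)


print(round_trip("spoke1", "10.1.1.4", "spoke2", "10.1.2.4"))    # True
print(round_trip("spoke1", "100.65.0.4", "spoke1", "10.1.1.4"))  # True
print(round_trip("spoke1", "100.65.0.4", "spoke2", "10.1.2.4"))  # False
```

In the last case the request does reach spoke 2, but spoke 2 considers 100.65.0.4 one of its own addresses, so the reply never makes it back to spoke 1.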

We can have a look at the AVNM configuration. First, you can list your AVNM instances, network groups, connectivity configurations and group members:

❯ az network manager list -g $rg -o table
Description    Location    Name     ProvisioningState    ResourceGroup
-------------  ----------  -------  -------------------  ---------------
               westeurope  dnsavnm  Succeeded            DNS

❯ az network manager group list --network-manager-name dnsavnm -g $rg -o table
Description    Name    ProvisioningState    ResourceGroup
-------------  ------  -------------------  ---------------
               dns     Succeeded            DNS

❯ az network manager connect-config list --network-manager-name dnsavnm -g $rg -o table
ConnectivityTopology    DeleteExistingPeering    Description    IsGlobal    Name         ProvisioningState    ResourceGroup
----------------------  -----------------------  -------------  ----------  -----------  -------------------  ---------------
Mesh                    False                                   False       fullmesh     Succeeded            DNS
Mesh                    False                                   False       dnsfullmesh  Succeeded            DNS

❯ az network manager group static-member list --network-manager-name dnsavnm -g $rg --network-group-name dns -o table
Name             ProvisioningState    ResourceGroup    ResourceId
---------------  -------------------  ---------------  -------------------------------------------------------------------------------------------------------------------------
ANM_kk2ybqy59f   Succeeded            DNS              /subscriptions/e7da9914-9b05-4891-893c-546cb7b0422e/resourceGroups/dns/providers/Microsoft.Network/virtualNetworks/hub
ANM_wxdxdlwc3q   Succeeded            DNS              /subscriptions/e7da9914-9b05-4891-893c-546cb7b0422e/resourceGroups/dns/providers/Microsoft.Network/virtualNetworks/spoke1
ANM_ww7u1ve567   Succeeded            DNS              /subscriptions/e7da9914-9b05-4891-893c-546cb7b0422e/resourceGroups/dns/providers/Microsoft.Network/virtualNetworks/spoke2

You can also see details about the configuration applied to a specific VNet, for example spoke 1:

❯ az network manager list-effective-connectivity-config --virtual-network-name $spoke1_vnet_name -g $rg -o table --query 'value[].{Topology:connectivityTopology,IsGlobal:isGlobal,State:provisioningState,NetworkGroup:configurationGroups[0].id}'
Topology    IsGlobal    State      NetworkGroup
----------  ----------  ---------  --------------------------------------------------------------------------------------------------------------------------------------------
Mesh        False       Succeeded  /subscriptions/e7da9914-9b05-4891-893c-546cb7b0422e/resourceGroups/DNS/providers/Microsoft.Network/networkManagers/dnsavnm/networkGroups/dns

We could spend all day looking at AVNM CLI commands, so let’s now have a look at the effective routes of the client VM in spoke 1:

❯ az network nic show-effective-route-table -n spoke1clientvmVMNic -g $rg -o table
Source    State    Address Prefix              Next Hop Type     Next Hop IP
--------  -------  --------------------------  ----------------  -------------
Default   Active   10.1.1.0/24                 VnetLocal
Default   Active   100.64.0.0/10               VnetLocal
Default   Active   192.168.0.0/16 10.1.2.0/24  ConnectedGroup
Default   Invalid  0.0.0.0/0                   Internet
User      Active   0.0.0.0/0                   VirtualAppliance  192.168.1.4

You can see the two local VNet prefixes (the non-overlapping 10.1.1.0/24 and the overlapping 100.64.0.0/10), as well as the prefixes coming from the AVNM peerings (next hop type ConnectedGroup), which include only the non-overlapping ranges from the adjacent VNets (192.168.0.0/16 and 10.1.2.0/24).
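The filtering rule visible in that table can be reproduced with a short model. This is a hedged sketch, not Azure’s route computation: it assumes the hub address space is 192.168.0.0/16 plus 100.64.0.0/10, that remote prefixes overlapping any local prefix are simply not installed, and that a user-defined route for 0.0.0.0/0 marks the system default route as Invalid. The function name and argument shapes are hypothetical.

```python
from ipaddress import ip_network


def effective_routes(local, remote_vnets, user_routes):
    """Rebuild a simplified effective route table for one VNet.

    local: prefixes of this VNet; remote_vnets: list of prefix lists for the
    other VNets in the AVNM mesh; user_routes: {prefix: next_hop_ip} from a UDR.
    Returns (source, state, prefixes, next_hop_type, next_hop_ip) tuples.
    """
    local = [ip_network(p) for p in local]
    rows = [("Default", "Active", str(p), "VnetLocal", "") for p in local]

    # Only remote prefixes that do not overlap a local prefix are installed.
    remote = [ip_network(p) for vnet in remote_vnets for p in vnet]
    usable = [p for p in remote if not any(p.overlaps(loc) for loc in local)]
    if usable:
        rows.append(("Default", "Active", " ".join(str(p) for p in usable),
                     "ConnectedGroup", ""))

    # A user route with the same prefix overrides the system default route.
    default_state = "Invalid" if "0.0.0.0/0" in user_routes else "Active"
    rows.append(("Default", default_state, "0.0.0.0/0", "Internet", ""))
    for prefix, next_hop in user_routes.items():
        rows.append(("User", "Active", prefix, "VirtualAppliance", next_hop))
    return rows


# Spoke 1, with the hub and spoke 2 as the other mesh members.
for row in effective_routes(
        ["10.1.1.0/24", "100.64.0.0/10"],
        [["192.168.0.0/16", "100.64.0.0/10"], ["10.1.2.0/24", "100.64.0.0/10"]],
        {"0.0.0.0/0": "192.168.1.4"}):
    print(row)
```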

Azure Route Server comes into the picture

You might be wondering why I am using the “mesh” topology in AVNM if what I want is a hub and spoke. The reason is that the hub-to-spoke peerings deployed by AVNM are classic VNet peerings, so they wouldn’t support the overlapping IP spaces.

A downside of the “pseudo” VNet peerings created by AVNM in a mesh topology is that they don’t support the UseRemoteGateways and AllowGatewayTransit settings, which VPN and ExpressRoute gateways require to provide connectivity to the spokes. To compensate for that, we can use Route Server to advertise a summary for the spokes (I am using 10.1.0.0/16 in this lab) with the Azure Firewall in the hub as the next hop:
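When picking that summary, two properties matter: it must cover every non-overlapping spoke range, and it must not collide with prefixes that are already routed elsewhere (the hub, the shared 100.64.0.0/10 range, on-premises). A minimal sketch of that check, using the prefixes from this lab; the list of "other" prefixes and the helper names are my assumptions:

```python
from ipaddress import ip_network

SUMMARY = ip_network("10.1.0.0/16")  # summary advertised via Route Server in this lab
SPOKES = [ip_network("10.1.1.0/24"), ip_network("10.1.2.0/24")]
# Prefixes already routed elsewhere (hub, overlapping range, on-premises).
OTHER = [ip_network("192.168.0.0/16"), ip_network("100.64.0.0/10"),
         ip_network("172.16.0.0/16")]


def summary_is_safe(summary, spokes, other):
    """The summary must cover every spoke range and must not overlap any other prefix."""
    covers_all = all(s.subnet_of(summary) for s in spokes)
    collides = any(summary.overlaps(o) for o in other)
    return covers_all and not collides


def smallest_summary(spokes):
    """Grow the first spoke prefix one bit at a time until it contains all the others."""
    candidate = spokes[0]
    while not all(s.subnet_of(candidate) for s in spokes):
        candidate = candidate.supernet()
    return candidate


print(summary_is_safe(SUMMARY, SPOKES, OTHER))  # True
print(smallest_summary(SPOKES))                 # 10.1.0.0/22
```

A /22 would be the tightest summary for the two current spokes; a /16 presumably leaves room for more spokes without touching the Route Server advertisement.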

                          My traceroute  [v0.92]
spoke1clientvm (10.1.1.4)                            2022-11-14T16:59:32+0000
Keys:  Help   Display mode   Restart statistics   Order of fields   quit
                                  Packets               Pings
 Host                           Loss%   Snt   Last   Avg  Best  Wrst StDev
 1. 192.168.1.5                  0.0%    89    2.3   2.8   1.8   8.9   1.2
    192.168.1.7
 2. 172.16.0.4                   0.0%    89    6.3   6.9   5.1  21.3   2.8

That mtr output shows that traffic from the spoke to on-premises first traverses the Azure Firewall (both instances, 192.168.1.5 and 192.168.1.7, show up as the first hop) before reaching on-premises (172.16.0.4). By the way, if you are not using mtr already, you are missing out: to get proper load balancing across both firewall instances, I am running it in TCP mode with mtr -T -P 22 172.16.0.4.
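The intuition behind the TCP probes is flow hashing: a load balancer in front of the firewall instances typically picks an instance from a hash of the 5-tuple (source IP, source port, destination IP, destination port, protocol), so probes that share the whole tuple always land on the same instance, while TCP probes from changing source ports can spread. This sketch is purely illustrative: SHA-256 stands in for the real (unpublished) hash, and treating ICMP probes as port 0 is a simplifying assumption.

```python
import hashlib

# The two firewall instances seen in the mtr output.
INSTANCES = ["192.168.1.5", "192.168.1.7"]


def pick_instance(src_ip, src_port, dst_ip, dst_port, proto):
    """Map a flow's 5-tuple to one instance with a stable hash (SHA-256 as a stand-in)."""
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{proto}".encode()
    digest = hashlib.sha256(key).digest()
    return INSTANCES[int.from_bytes(digest[:4], "big") % len(INSTANCES)]


# Probes that share the whole 5-tuple always hit the same instance...
same = {pick_instance("10.1.1.4", 0, "172.16.0.4", 0, "icmp") for _ in range(64)}
# ...while TCP probes to port 22 from different source ports spread out.
spread = {pick_instance("10.1.1.4", port, "172.16.0.4", 22, "tcp")
          for port in range(40000, 40064)}
print(len(same), len(spread))
```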

In case you didn’t know, Azure VPN gateways are invisible from a traceroute perspective (they don’t decrement the TTL).
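A small simulation makes the effect visible: a probe sent with TTL t expires at the t-th device that decrements the TTL, so a device that forwards without decrementing never produces a hop. In this sketch the path and the `decrements_ttl` flag per device are hypothetical inputs modeled on the lab (firewall, VPN gateway, on-premises device):

```python
def traceroute(path, max_ttl=30):
    """Return the hops a traceroute would print.

    path: ordered (name, decrements_ttl) tuples from source to destination.
    A probe with TTL t expires at the t-th device that decrements the TTL;
    devices that forward without decrementing never show up as a hop.
    """
    hops = []
    for ttl in range(1, max_ttl + 1):
        remaining = ttl
        for i, (name, decrements) in enumerate(path):
            if i == len(path) - 1:
                hops.append(name)  # the destination answers the probe
                return hops
            if decrements:
                remaining -= 1
                if remaining == 0:
                    hops.append(name)  # TTL expired here: ICMP time exceeded
                    break
    return hops


# Hypothetical path from spoke 1 to on-premises.
PATH = [("192.168.1.5", True), ("vpn-gateway", False), ("172.16.0.4", True)]
print(traceroute(PATH))  # ['192.168.1.5', '172.16.0.4']
```

The result has the same two hops as the mtr output above; flipping the gateway’s flag to True would add it as a hop in between.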

If we look into the routes that the onprem VPN device is learning, we will see the summary advertised to provide connectivity to the spokes:

jose@mynva:~$ sudo birdc show route
BIRD 1.6.3 ready.
10.1.0.0/16        via 192.168.0.4 on vti0 [vpngw0 21:30:12] ! (100/0) [AS65501i]
192.168.0.0/16     via 192.168.0.4 on vti0 [vpngw0 2022-11-13] ! (100/0) [AS65515i]
100.64.0.0/10      via 192.168.0.4 on vti0 [vpngw0 2022-11-13] ! (100/0) [AS65515i]
192.168.0.4/32     dev vti0 [kernel1 2022-11-13] * (254)
10.13.76.0/25      via 192.168.0.4 on vti0 [vpngw0 2022-11-13] ! (100/0) [AS65515i]
10.13.76.128/25    via 192.168.0.4 on vti0 [vpngw0 2022-11-13] ! (100/0) [AS65515i]
172.16.0.0/16      via 172.16.0.1 on eth0 [static1 2022-11-13] * (200)
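If you want to check this programmatically, the trailing `[AS…]` tag in BIRD 1.x `show route` output carries the origin AS plus an origin code (`i` for IGP). The sketch below parses the lines above into a prefix-to-origin-ASN map; the regular expression is my assumption about the line layout, based only on this output, and routes without a BGP tag (kernel, static) are skipped.

```python
import re

# Route lines copied from the birdc output above.
BIRD_OUTPUT = """\
10.1.0.0/16        via 192.168.0.4 on vti0 [vpngw0 21:30:12] ! (100/0) [AS65501i]
192.168.0.0/16     via 192.168.0.4 on vti0 [vpngw0 2022-11-13] ! (100/0) [AS65515i]
100.64.0.0/10      via 192.168.0.4 on vti0 [vpngw0 2022-11-13] ! (100/0) [AS65515i]
192.168.0.4/32     dev vti0 [kernel1 2022-11-13] * (254)
10.13.76.0/25      via 192.168.0.4 on vti0 [vpngw0 2022-11-13] ! (100/0) [AS65515i]
10.13.76.128/25    via 192.168.0.4 on vti0 [vpngw0 2022-11-13] ! (100/0) [AS65515i]
172.16.0.0/16      via 172.16.0.1 on eth0 [static1 2022-11-13] * (200)
"""

# prefix, then anything, then a trailing [AS<number><origin code>] tag
ROUTE = re.compile(r"^(\S+/\d+)\s.*?\[AS(\d+)([ie?])\]\s*$")


def origin_asns(text):
    """Map each BGP-learned prefix to its origin ASN; non-BGP routes are skipped."""
    result = {}
    for line in text.splitlines():
        match = ROUTE.match(line.strip())
        if match:
            result[match.group(1)] = int(match.group(2))
    return result


origins = origin_asns(BIRD_OUTPUT)
print(origins["10.1.0.0/16"])  # 65501
```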

We can see that the route originates in ASN 65501, which is the ASN I configured on my Azure NVA. We can also check the routes learned by the VPN gateway (showing only one of its two instances here for simplicity):

❯ az network vnet-gateway list-learned-routes -n vpnvng -g $rg --query 'value[].{LocalAddress:localAddress, Peer:sourcePeer, Network:network, NextHop:nextHop, ASPath: asPath, Origin:origin, Weight:weight}' -o table
LocalAddress    Peer         Network          ASPath    Origin    Weight    NextHop
--------------  -----------  ---------------  --------  --------  --------  -----------
192.168.0.5     192.168.0.5  192.168.0.0/16             Network   32768
192.168.0.5     192.168.0.5  10.13.76.128/25            Network   32768
192.168.0.5     192.168.0.4  10.13.76.0/25              IBgp      32768     192.168.0.4
192.168.0.5     192.168.2.4  10.13.76.0/25              IBgp      32768     192.168.0.4
192.168.0.5     192.168.2.5  10.13.76.0/25              IBgp      32768     192.168.0.4
192.168.0.5     192.168.0.4  172.16.0.4/32              IBgp      32768     192.168.0.4
192.168.0.5     192.168.0.4  172.16.0.0/16    65001     IBgp      32768     192.168.0.4
192.168.0.5     192.168.2.4  172.16.0.0/16    65001     IBgp      32768     192.168.0.4
192.168.0.5     192.168.2.5  172.16.0.0/16    65001     IBgp      32768     192.168.0.4
192.168.0.5     192.168.0.5  100.64.0.0/10              Network   32768
192.168.0.5     192.168.2.4  10.1.0.0/16      65501     IBgp      32768     192.168.1.4
192.168.0.5     192.168.2.5  10.1.0.0/16      65501     IBgp      32768     192.168.1.4
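To see what these routes mean for traffic arriving from on-premises, a longest-prefix-match lookup over the table is enough. This simplified sketch uses the rows above with duplicates from different peers collapsed, and ignores BGP best-path selection; an empty next hop means the prefix belongs to the gateway’s own VNet. The `lookup` helper is hypothetical.

```python
from ipaddress import ip_address, ip_network

# (network, origin, next hop) from the learned routes above, duplicates collapsed.
LEARNED = [
    ("192.168.0.0/16", "Network", ""),
    ("10.13.76.128/25", "Network", ""),
    ("10.13.76.0/25", "IBgp", "192.168.0.4"),
    ("172.16.0.4/32", "IBgp", "192.168.0.4"),
    ("172.16.0.0/16", "IBgp", "192.168.0.4"),
    ("100.64.0.0/10", "Network", ""),
    ("10.1.0.0/16", "IBgp", "192.168.1.4"),
]


def lookup(dst):
    """Return the (network, origin, next_hop) entry with the longest matching prefix."""
    dst = ip_address(dst)
    candidates = [(ip_network(n), origin, nh) for n, origin, nh in LEARNED
                  if dst in ip_network(n)]
    if not candidates:
        return None
    return max(candidates, key=lambda c: c[0].prefixlen)


print(lookup("10.1.1.4"))    # spoke VM: summary route, next hop is the firewall
print(lookup("100.65.0.4"))  # overlapping range: only the hub's own copy is reachable
```

Traffic to a spoke lands on the firewall (192.168.1.4) through the summary, while 100.65.0.4 only ever resolves to the hub VNet itself, which matches the restricted hub VM being reachable from on-premises and the spoke ones not.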

Wrapping up

Hopefully you have learned something from this post, even if it is only how flexible AVNM’s peerings can be. This specific setup might be used to save IP addresses in critical situations, but before going to this extreme, I would strongly encourage you to use less complex configurations.
