This is not a topology I would define as “best practice”, or one that I see in every Azure deployment out there, but I would certainly not describe it as exotic either. In this design, organizations want to use Azure as the Internet breakout for their on-premises systems. This could be because they do not have good Internet access on-premises, or because their Internet proxies have already been migrated to Azure.

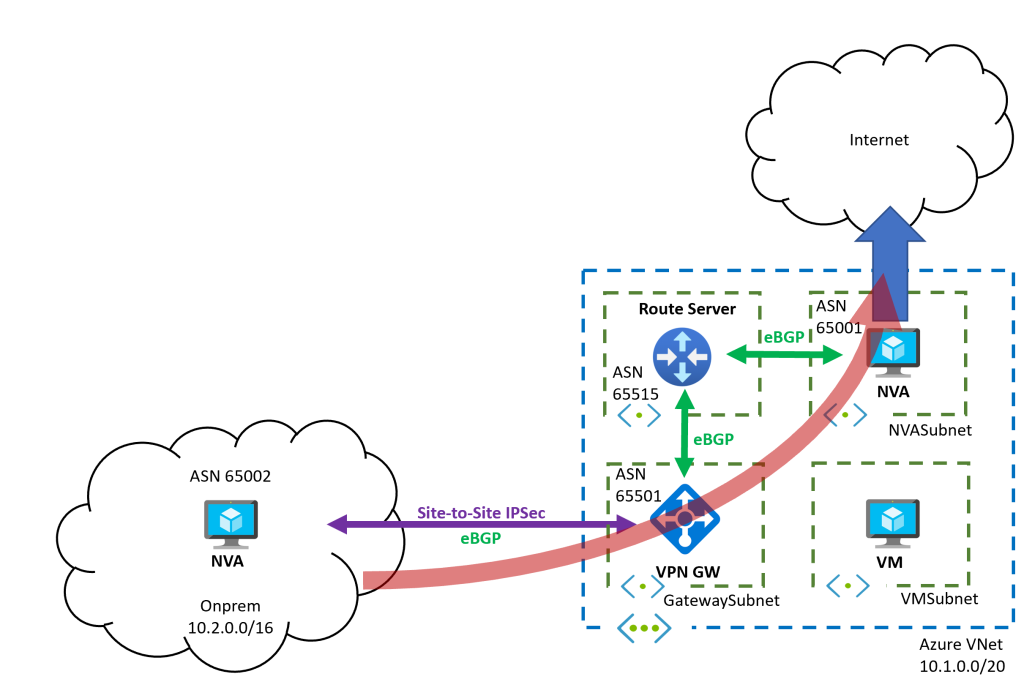

In this post I will test the topology shown above, where I am using Site-to-Site VPN to connect the on-premises network with Azure. This IPsec VPN could be replaced with ExpressRoute; from a functionality perspective it should behave exactly the same (although I would definitely test it before going to production).

You can find an Azure CLI script to deploy this topology here. There are a couple of things worth noting, which we will explore in detail later in this post:

- The Azure NVA injects default routes into the network, which are propagated to the on-premises network via BGP

- You need UDRs in the NVA's subnet to override the learned default routes and avoid routing loops

- The 0.0.0.0/0 route is not advertised to onprem, but 0.0.0.0/1 and 128.0.0.0/1 are

- You don’t need any UDR in the GatewaySubnet

Advertising a default route from Azure

In order to attract traffic to Azure, we need to advertise a default route (0.0.0.0/0) from Azure. With Site-to-Site VPN you might get away with static routing, but with ExpressRoute you need BGP.

I have provisioned an Azure Route Server in the Azure VNet, and peered it with an NVA based on Ubuntu and bird. You can think of this NVA as your Internet egress firewall or your Internet proxy:

```
$ az network routeserver peering list --routeserver rs -g $rg -o table
Name    PeerAsn    PeerIp    ProvisioningState    ResourceGroup
------  ---------  --------  -------------------  ---------------
nva     65001      10.1.2.4  Succeeded            nva
```

The NVA is injecting some routes

We announce five routes from the NVA:

- 1.1.1.1/32 is a test route that I inject to verify route propagation without breaking anything

- 10.1.0.0/16 is the VNet prefix, which is sent to onprem over VPN so that onprem knows how to reach Azure

- 0.0.0.0/0 is the typical default route you would expect

- Finally, the default route split in two halves, 0.0.0.0/1 and 128.0.0.0/1 (we will see why later on)
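The two /1 prefixes cover exactly the same address space as 0.0.0.0/0, but each of them is more specific, so longest-prefix matching prefers them over any /0 in the same routing table. A minimal Python sketch using the standard `ipaddress` module to check both properties; the `longest_match` helper and the sample destinations are illustrative and not part of the lab:

```python
import ipaddress

# Split the default route into two halves: 0.0.0.0/1 and 128.0.0.0/1
default = ipaddress.ip_network("0.0.0.0/0")
halves = list(default.subnets(prefixlen_diff=1))
print([str(n) for n in halves])  # ['0.0.0.0/1', '128.0.0.0/1']

# Together, the two halves cover exactly the same addresses as the /0
assert sum(n.num_addresses for n in halves) == default.num_addresses


def longest_match(destination, prefixes):
    """Return the most specific prefix containing destination (hypothetical helper)."""
    addr = ipaddress.ip_address(destination)
    networks = [ipaddress.ip_network(p) for p in prefixes]
    matches = [n for n in networks if addr in n]
    return max(matches, key=lambda n: n.prefixlen, default=None)


# With a /0 and the two /1 routes in the same table, every IPv4 destination
# is matched by one of the /1 routes, since a /1 is more specific than a /0.
table = ["0.0.0.0/0", "0.0.0.0/1", "128.0.0.0/1"]
print(longest_match("8.8.8.8", table))          # 0.0.0.0/1
print(longest_match("137.117.186.165", table))  # 128.0.0.0/1
```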

```
$ az network routeserver peering list-learned-routes -n $nva_name --routeserver rs -g $rg --query 'RouteServiceRole_IN_0' -o table
LocalAddress    Network      NextHop    SourcePeer    Origin    AsPath    Weight
--------------  -----------  ---------  ------------  --------  --------  --------
10.1.1.4        1.1.1.1/32   10.1.2.4   10.1.2.4      EBgp      65001     32768
10.1.1.4        10.1.0.0/16  10.1.2.4   10.1.2.4      EBgp      65001     32768
10.1.1.4        0.0.0.0/0    10.1.2.4   10.1.2.4      EBgp      65001     32768
10.1.1.4        128.0.0.0/1  10.1.2.4   10.1.2.4      EBgp      65001     32768
10.1.1.4        0.0.0.0/1    10.1.2.4   10.1.2.4      EBgp      65001     32768
```

There is an Active/Active VPN Gateway to provide hybrid connectivity (note that Active/Passive VPN gateways are not supported together with Azure Route Server). Looking at the BGP peerings of the gateway, we can see that it is peered with both Route Server instances (10.1.1.4 and .5), as well as with the onprem device (10.2.2.4). The last adjacencies that you see are the iBGP connections between the two VPN gateway instances (10.1.0.4 and .5).

Note that the table is actually a concatenation of the neighbors of both VPN instances, so you need to look at each half separately (I have inserted a line break to help visualize the two parts).
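If you consume this output in a script rather than by eye, the two halves can also be separated programmatically. The following Python sketch works on the rows of the peer-status table that follows, transcribed by hand, and relies on two heuristics that are my own assumptions rather than documented CLI behavior: a new instance starts whenever a neighbor address repeats, and each instance reports its own address with state `Unknown` (it cannot peer with itself).

```python
# (neighbor, asn, state) rows from `az network vnet-gateway list-bgp-peer-status -o table`,
# transcribed from the output in this post
rows = [
    ("10.1.1.4", 65515, "Connected"), ("10.1.1.5", 65515, "Connected"),
    ("10.2.2.4", 65002, "Connected"), ("10.1.0.4", 65501, "Unknown"),
    ("10.1.0.5", 65501, "Connected"),
    ("10.1.1.4", 65515, "Connected"), ("10.1.1.5", 65515, "Connected"),
    ("10.2.2.4", 65002, "Connected"), ("10.1.0.4", 65501, "Connected"),
    ("10.1.0.5", 65501, "Unknown"),
]


def split_by_instance(rows):
    """Heuristic: start a new group every time a neighbor address repeats."""
    groups, seen = [], set()
    for row in rows:
        if not groups or row[0] in seen:
            groups.append([])
            seen = set()
        groups[-1].append(row)
        seen.add(row[0])
    return groups


for group in split_by_instance(rows):
    # Assumption: the instance's own address is the one reported as Unknown
    own_ip = next((n for n, _asn, state in group if state == "Unknown"), "?")
    up = [n for n, _asn, state in group if state == "Connected"]
    print(f"instance {own_ip}: {len(up)} connected peers -> {', '.join(up)}")
```

For the data in this post, both instances end up with four connected peers: the two Route Server instances, the onprem device and the other gateway instance.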

```
$ az network vnet-gateway list-bgp-peer-status -n vpngw -g $rg -o table
Neighbor    ASN    State      ConnectedDuration    RoutesReceived    Sent    Received
----------  -----  ---------  -------------------  ----------------  ------  -------
10.1.1.4    65515  Connected  05:48:12.8577060     6                 409     412
10.1.1.5    65515  Connected  05:48:14.1232289     6                 409     411
10.2.2.4    65002  Connected  00:55:28.7807900     3                 74      79
10.1.0.4    65501  Unknown                         0                 0       0
10.1.0.5    65501  Connected  05:48:11.9671916     7                 417     419

10.1.1.4    65515  Connected  05:48:11.9799755     4                 413     407
10.1.1.5    65515  Connected  05:48:11.8549756     4                 410     411
10.2.2.4    65002  Connected  00:55:28.5877473     3                 75      80
10.1.0.4    65501  Connected  05:48:10.1205981     7                 417     419
10.1.0.5    65501  Unknown                         0                 0       0
```

Next, we can verify that the VPN Gateway instances learn the NVA's routes from the Azure Route Server. Here again, the output is divided into two halves, one per VPN gateway instance. The routes that the Azure Route Server learned from the Azure NVA are the ones with the 65515-65001 AS path: 1.1.1.1/32, 0.0.0.0/0, 0.0.0.0/1 and 128.0.0.0/1 (the 10.1.0.0/16 VNet prefix only appears as the gateway's own Network route):

```
$ az network vnet-gateway list-learned-routes -n vpngw -g $rg -o table
Network      Origin    SourcePeer    AsPath             Weight    NextHop
-----------  --------  ------------  -----------------  --------  ---------
10.1.0.0/16  Network   10.1.0.4                         32768
1.1.1.1/32   EBgp      10.1.1.4      65515-65001        32768     10.1.1.4
1.1.1.1/32   EBgp      10.1.1.5      65515-65001        32768     10.1.1.5
1.1.1.1/32   IBgp      10.1.0.5      65515-65001        32768     10.1.0.5
10.2.2.4/32  Network   10.1.0.4                         32768
10.2.2.4/32  IBgp      10.1.0.5                         32768     10.1.0.5
2.2.2.2/32   EBgp      10.2.2.4      65002              32768     10.2.2.4
2.2.2.2/32   IBgp      10.1.0.5      65002              32768     10.1.0.5
10.2.0.0/16  EBgp      10.2.2.4      65002              32768     10.2.2.4
10.2.0.0/16  IBgp      10.1.0.5      65002              32768     10.1.0.5
0.0.0.0/0    EBgp      10.1.1.4      65515-65001        32768     10.1.1.4
0.0.0.0/0    IBgp      10.1.0.5      65515-65001        32768     10.1.0.5
0.0.0.0/0    EBgp      10.1.1.5      65515-65001        32768     10.1.1.5
10.1.0.5/32  EBgp      10.2.2.4      65002              32768     10.2.2.4
0.0.0.0/1    EBgp      10.1.1.4      65515-65001        32768     10.1.1.4
0.0.0.0/1    IBgp      10.1.0.5      65515-65001        32768     10.1.0.5
0.0.0.0/1    EBgp      10.1.1.5      65515-65001        32768     10.1.1.5
128.0.0.0/1  EBgp      10.1.1.4      65515-65001        32768     10.1.1.4
128.0.0.0/1  IBgp      10.1.0.5      65515-65001        32768     10.1.0.5
128.0.0.0/1  EBgp      10.1.1.5      65515-65001        32768     10.1.1.5
2.2.2.2/32   EBgp      10.1.1.4      65515-65501-65002  32768     10.1.1.4
2.2.2.2/32   EBgp      10.1.1.5      65515-65501-65002  32768     10.1.1.5
10.2.0.0/16  EBgp      10.1.1.4      65515-65501-65002  32768     10.1.1.4
10.2.0.0/16  EBgp      10.1.1.5      65515-65501-65002  32768     10.1.1.5

10.1.0.0/16  Network   10.1.0.5                         32768
1.1.1.1/32   EBgp      10.1.1.4      65515-65001        32768     10.1.1.4
1.1.1.1/32   IBgp      10.1.0.4      65515-65001        32768     10.1.0.4
1.1.1.1/32   EBgp      10.1.1.5      65515-65001        32768     10.1.1.5
10.2.2.4/32  Network   10.1.0.5                         32768
10.2.2.4/32  IBgp      10.1.0.4                         32768     10.1.0.4
2.2.2.2/32   EBgp      10.2.2.4      65002              32768     10.2.2.4
2.2.2.2/32   IBgp      10.1.0.4      65002              32768     10.1.0.4
10.2.0.0/16  EBgp      10.2.2.4      65002              32768     10.2.2.4
10.2.0.0/16  IBgp      10.1.0.4      65002              32768     10.1.0.4
10.1.0.4/32  EBgp      10.2.2.4      65002              32768     10.2.2.4
0.0.0.0/0    EBgp      10.1.1.4      65515-65001        32768     10.1.1.4
0.0.0.0/0    IBgp      10.1.0.4      65515-65001        32768     10.1.0.4
0.0.0.0/0    EBgp      10.1.1.5      65515-65001        32768     10.1.1.5
0.0.0.0/1    EBgp      10.1.1.4      65515-65001        32768     10.1.1.4
0.0.0.0/1    IBgp      10.1.0.4      65515-65001        32768     10.1.0.4
0.0.0.0/1    EBgp      10.1.1.5      65515-65001        32768     10.1.1.5
128.0.0.0/1  EBgp      10.1.1.4      65515-65001        32768     10.1.1.4
128.0.0.0/1  IBgp      10.1.0.4      65515-65001        32768     10.1.0.4
128.0.0.0/1  EBgp      10.1.1.5      65515-65001        32768     10.1.1.5
```

However, if we look at the routes that the VPN gateway instances advertise to the on-premises appliance over VPN, we notice that they advertise the two /1 routes but not the /0 (besides the 1.1.1.1/32 test route and the 10.1.0.0/16 VNet prefix):

```
$ az network vnet-gateway list-advertised-routes -n vpngw -g $rg --peer $onprem_nva_private_ip -o table
Network      NextHop    Origin    AsPath             Weight
-----------  ---------  --------  -----------------  --------
10.1.0.0/16  10.1.0.5   Igp       65501              0
0.0.0.0/1    10.1.0.5   Igp       65501-65515-65001  0
128.0.0.0/1  10.1.0.5   Igp       65501-65515-65001  0
1.1.1.1/32   10.1.0.5   Igp       65501-65515-65001  0
10.1.0.0/16  10.1.0.4   Igp       65501              0
0.0.0.0/1    10.1.0.4   Igp       65501-65515-65001  0
128.0.0.0/1  10.1.0.4   Igp       65501-65515-65001  0
1.1.1.1/32   10.1.0.4   Igp       65501-65515-65001  0
```

In other words, for some reason the Azure VPN Gateway does not re-advertise the 0.0.0.0/0 it learns. I can only guess at the actual reason (my guess would be that a static default route in the VPN Gateway prevents the 0.0.0.0/0 learned over BGP from being selected and re-advertised).
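To make the comparison explicit, here is a minimal Python sketch that takes the learned and advertised routes of one gateway instance and lists the NVA prefixes (AS path 65515-65001) that do not make it to onprem. It assumes that `az network vnet-gateway list-learned-routes` and `list-advertised-routes` with `-o json` return an object shaped like `{"value": [{"network": ..., "asPath": ...}]}`; the sample data is transcribed from the tables above for instance 10.1.0.4 and trimmed for brevity.

```python
import json


def nva_routes_not_advertised(learned_json, advertised_json, nva_path="65515-65001"):
    """Prefixes learned from the NVA via ARS that the gateway does not advertise onprem.

    Assumption: both inputs are the JSON printed by the `az network vnet-gateway
    list-learned-routes` / `list-advertised-routes ... -o json` commands, shaped like
    {"value": [{"network": "...", "asPath": "..."}, ...]}.
    """
    learned = json.loads(learned_json)["value"]
    advertised = json.loads(advertised_json)["value"]
    # Routes originated by the NVA (AS 65001) and reflected by Route Server (AS 65515)
    from_nva = {r["network"] for r in learned if r.get("asPath") == nva_path}
    sent = {r["network"] for r in advertised}
    return sorted(from_nva - sent)


# Sample data transcribed (and trimmed) from the outputs above, instance 10.1.0.4
learned_json = json.dumps({"value": [
    {"network": "10.1.0.0/16", "origin": "Network", "asPath": ""},
    {"network": "1.1.1.1/32", "origin": "EBgp", "asPath": "65515-65001"},
    {"network": "10.2.0.0/16", "origin": "EBgp", "asPath": "65002"},
    {"network": "0.0.0.0/0", "origin": "EBgp", "asPath": "65515-65001"},
    {"network": "0.0.0.0/1", "origin": "EBgp", "asPath": "65515-65001"},
    {"network": "128.0.0.0/1", "origin": "EBgp", "asPath": "65515-65001"},
]})
advertised_json = json.dumps({"value": [
    {"network": "10.1.0.0/16", "asPath": "65501"},
    {"network": "0.0.0.0/1", "asPath": "65501-65515-65001"},
    {"network": "128.0.0.0/1", "asPath": "65501-65515-65001"},
    {"network": "1.1.1.1/32", "asPath": "65501-65515-65001"},
]})

print(nva_routes_not_advertised(learned_json, advertised_json))  # ['0.0.0.0/0']
```

In a live environment you could feed it the JSON output of the two commands shown above (with `-o json` instead of `-o table`), provided the shape matches the assumption in the docstring.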

And, sure enough, the onprem appliance (simulated here with another VM running in a separate VNet) learns those routes, including the two /1 routes:

```
$ ssh $onprem_nva_pip_ip "sudo birdc show route"
BIRD 1.6.3 ready.
2.2.2.2/32         via 10.2.2.1 on eth0 [static1 21:42:20] * (200)
1.1.1.1/32         via 10.1.0.4 on vti0 [vpngw0 21:49:53] ! (100/0) [AS65001i]
                   via 10.1.0.5 on vti1 [vpngw1 21:49:53] (100/0) [AS65001i]
0.0.0.0/1          via 10.1.0.4 on vti0 [vpngw0 21:49:50] ! (100/0) [AS65001i]
                   via 10.1.0.5 on vti1 [vpngw1 21:49:48] (100/0) [AS65001i]
10.2.0.0/16        via 10.2.2.1 on eth0 [static1 21:42:20] * (200)
10.1.0.0/16        via 10.1.0.4 on vti0 [vpngw0 21:42:25] ! (100/0) [AS65501i]
                   via 10.1.0.5 on vti1 [vpngw1 21:42:25] (100/0) [AS65501i]
10.1.0.5/32        dev vti1 [kernel1 21:42:20] * (254)
10.1.0.4/32        dev vti0 [kernel1 21:42:20] * (254)
128.0.0.0/1        via 10.1.0.4 on vti0 [vpngw0 21:49:50] ! (100/0) [AS65001i]
                   via 10.1.0.5 on vti1 [vpngw1 21:49:48] (100/0) [AS65001i]
```

Default routing for Azure VMs

In the Route Server VNet, all of these routes are programmed into the effective routes of the NICs. However, take into account that in peered VNets the 0.0.0.0/0 wouldn't be injected, only the /1 routes. This is the second reason why you might want to advertise the two /1 routes instead of a single /0:

```
$ az network nic show-effective-route-table --ids $azurevm_nic_id -o table
Source                 State    Address Prefix    Next Hop Type          Next Hop IP
---------------------  -------  ----------------  ---------------------  -------------
Default                Active   10.1.0.0/16       VnetLocal
VirtualNetworkGateway  Active   10.2.0.0/16       VirtualNetworkGateway  10.1.0.5
VirtualNetworkGateway  Active   10.2.0.0/16       VirtualNetworkGateway  10.1.0.4
VirtualNetworkGateway  Active   128.0.0.0/1       VirtualNetworkGateway  10.1.2.4
VirtualNetworkGateway  Active   0.0.0.0/1         VirtualNetworkGateway  10.1.2.4
VirtualNetworkGateway  Active   0.0.0.0/0         VirtualNetworkGateway  10.1.2.4
VirtualNetworkGateway  Active   2.2.2.2/32        VirtualNetworkGateway  10.1.0.5
VirtualNetworkGateway  Active   2.2.2.2/32        VirtualNetworkGateway  10.1.0.4
VirtualNetworkGateway  Active   1.1.1.1/32        VirtualNetworkGateway  10.1.2.4
```

Note that these routes are injected into the NVA subnet as well. This would cause a routing loop for Internet-bound packets: the NVA sends them out through its NIC, and the effective route (0.0.0.0/1 or 128.0.0.0/1 with next hop 10.1.2.4) returns them straight to the NVA.
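The loop follows from Azure's route selection: the longest prefix match wins and, for identical prefixes, a user-defined route is preferred over a BGP route, which is preferred over a system route. The following Python sketch is a simplified model of that selection, not Azure's implementation. The routes come from the effective route tables in this post, plus Azure's built-in 0.0.0.0/0 system route to the Internet, which the tables here do not show and which I add as an assumption; `NVA_IP` is the NVA address 10.1.2.4.

```python
import ipaddress

# Tie-breaker for identical prefixes: lower value wins (User > BGP > system)
SOURCE_PRIORITY = {"User": 0, "VirtualNetworkGateway": 1, "Default": 2}


def select_route(destination, routes):
    """Return the (source, prefix, next_hop) route used for destination, or None."""
    addr = ipaddress.ip_address(destination)
    matches = [r for r in routes if addr in ipaddress.ip_network(r[1])]
    # Longest prefix first, then source priority among equal-length prefixes
    return min(
        matches,
        key=lambda r: (-ipaddress.ip_network(r[1]).prefixlen, SOURCE_PRIORITY[r[0]]),
        default=None,
    )


NVA_IP = "10.1.2.4"

# Effective routes in the NVA subnet when no route table is associated
nva_subnet = [
    ("Default", "10.1.0.0/16", "VnetLocal"),
    ("Default", "0.0.0.0/0", "Internet"),  # Azure system route, assumed present
    ("VirtualNetworkGateway", "0.0.0.0/0", NVA_IP),
    ("VirtualNetworkGateway", "0.0.0.0/1", NVA_IP),
    ("VirtualNetworkGateway", "128.0.0.0/1", NVA_IP),
]
# UDRs overriding the three Internet prefixes in the NVA subnet
udrs = [("User", p, "Internet") for p in ("0.0.0.0/0", "0.0.0.0/1", "128.0.0.0/1")]

for label, table in (("without UDRs", nva_subnet), ("with UDRs", nva_subnet + udrs)):
    route = select_route("8.8.8.8", table)
    verdict = "loops back to the NVA" if route[2] == NVA_IP else "goes to the Internet"
    print(f"{label}: {route} -> {verdict}")
```

For 8.8.8.8, the first lookup returns the BGP 0.0.0.0/1 route with the NVA itself as next hop, while the second returns the user-defined 0.0.0.0/1 route with next hop type Internet.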

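As a consequence, we need to override the Internet routes in the NVA subnet with UDRs. You could use a route table with gateway route propagation disabled, but we do want the NVA to keep learning other BGP routes, such as the onprem IP space. With UDRs for 0.0.0.0/0, 0.0.0.0/1 and 128.0.0.0/1 pointing to next hop type Internet, the effective routes of the NVA's NIC look like this: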

```
$ az network nic show-effective-route-table --ids $nva_nic_id -o table
Source                 State    Address Prefix    Next Hop Type          Next Hop IP
---------------------  -------  ----------------  ---------------------  -------------
Default                Active   10.1.0.0/16       VnetLocal
VirtualNetworkGateway  Active   10.2.0.0/16       VirtualNetworkGateway  10.1.0.5
VirtualNetworkGateway  Active   10.2.0.0/16       VirtualNetworkGateway  10.1.0.4
VirtualNetworkGateway  Active   2.2.2.2/32        VirtualNetworkGateway  10.1.0.5
VirtualNetworkGateway  Active   2.2.2.2/32        VirtualNetworkGateway  10.1.0.4
VirtualNetworkGateway  Active   1.1.1.1/32        VirtualNetworkGateway  10.1.2.4
VirtualNetworkGateway  Invalid  0.0.0.0/0         VirtualNetworkGateway  10.1.2.4
VirtualNetworkGateway  Invalid  0.0.0.0/1         VirtualNetworkGateway  10.1.2.4
VirtualNetworkGateway  Invalid  128.0.0.0/1       VirtualNetworkGateway  10.1.2.4
User                   Active   0.0.0.0/0         Internet
User                   Active   0.0.0.0/1         Internet
User                   Active   128.0.0.0/1       Internet
```

Internet traffic flows through the Azure NVA

The Azure NVA has been configured to SNAT Internet traffic (in this example, everything addressed to destinations outside of 10.0.0.0/8). In a real deployment you would need to extend this exclusion to cover all of your private IP address space.
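As a rough model of that rule, the following Python sketch decides whether a packet would be masqueraded, given a list of excluded (private) destination ranges. `LAB_EXCLUSIONS` mirrors the single range excluded in this lab, while `RFC1918` is only an example of a wider exclusion list, not the configuration used here.

```python
import ipaddress

# Destination ranges that must NOT be source-NATed
LAB_EXCLUSIONS = ["10.0.0.0/8"]  # what the MASQUERADE rule in this lab excludes
RFC1918 = ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"]  # a wider example


def is_masqueraded(destination, excluded):
    """Model of a MASQUERADE rule with negated destinations: NAT unless excluded."""
    addr = ipaddress.ip_address(destination)
    return not any(addr in ipaddress.ip_network(net) for net in excluded)


for dst in ["137.117.186.165", "10.2.0.4", "192.168.1.10"]:
    print(dst, is_masqueraded(dst, LAB_EXCLUSIONS), is_masqueraded(dst, RFC1918))
```

With only 10.0.0.0/8 excluded, traffic to a private range such as 192.168.1.10 would also be NATed. Note that iptables does not accept a negated list of several destinations in one rule, so one common approach is to add a `RETURN` rule per private range ahead of the `MASQUERADE` rule. On the NVA itself, the rule that excludes only 10.0.0.0/8 sits in the POSTROUTING chain of the NAT table: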

```
$ ssh $nva_pip_ip "sudo iptables -t nat -L"
Chain PREROUTING (policy ACCEPT)
target     prot opt source               destination

Chain INPUT (policy ACCEPT)
target     prot opt source               destination

Chain OUTPUT (policy ACCEPT)
target     prot opt source               destination

Chain POSTROUTING (policy ACCEPT)
target     prot opt source               destination
MASQUERADE  all  --  anywhere            !10.0.0.0/8
```

We can use ifconfig.co to verify that connections are coming from the Azure NVA, both for Azure and onprem systems:

```
$ echo "Connectivity will be sourced from the Azure NVA, $nva_pip_ip:"
Connectivity will be sourced from the NVA, 137.117.186.165:
$ ssh $onprem_nva_pip_ip "curl -s4 ifconfig.co"
137.117.186.165
$ ssh -J $nva_pip_ip $azurevm_private_ip "curl -s4 ifconfig.co"
137.117.186.165
```

Internal traffic does not flow through the NVA

Connectivity between onprem and Azure (to a test virtual machine) flows over the VPN tunnel. The traffic goes from the onprem VPN appliance to the Azure VPN Gateway, then directly to the VM. You can see this because of the effective routes in the VPN Gateway above and for the

```
$ ssh $onprem_nva_pip_ip "ping $azurevm_private_ip -c 5"
PING 10.1.10.4 (10.1.10.4) 56(84) bytes of data.
64 bytes from 10.1.10.4: icmp_seq=1 ttl=64 time=4.45 ms
64 bytes from 10.1.10.4: icmp_seq=2 ttl=64 time=3.90 ms
64 bytes from 10.1.10.4: icmp_seq=3 ttl=64 time=4.43 ms
64 bytes from 10.1.10.4: icmp_seq=4 ttl=64 time=5.68 ms
64 bytes from 10.1.10.4: icmp_seq=5 ttl=64 time=5.00 ms
--- 10.1.10.4 ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 4005ms
rtt min/avg/max/mdev = 3.908/4.696/5.689/0.611 ms
```

If you want to secure internal traffic, you can insert an Azure Firewall or an NVA into this design. Azure Route Server could be used here too if the NVA supports BGP, but you would have to be very careful with the routing design. I will leave this conversation for a future post.

Summary

In this post we have seen how to advertise a default route from Azure to on-premises to attract Internet traffic. We had to split the default route in two halves (0.0.0.0/1 and 128.0.0.0/1) so that it would be propagated by the VPN Gateway. We verified that Internet egress traffic, both from on-premises and from Azure workloads in the VNet, flows through our Network Virtual Appliance.

Thanks for reading!

Hi, we have a similar setup where the ExpressRoute and VPN gateways coexist, and we need to enable Internet access for a router living behind the ExpressRoute gateway. We use a Route Server and Azure Firewall as the NVA. Can you please help?

Hey Isthi! With Virtual WAN you could easily advertise a default route to the ER location, with ARS it is more complex: first of all you need some box that advertises the 0.0.0.0/0 to the ARS via BGP (possibly with the AzFW as next hop). Second, that route would go to both ER and VPN, ARS doesn’t support filtering routes today.

Thanks, I appreciate your answer. I tried it, but I think the Microsoft Edge routers are blocking our Internet traffic coming from the on-prem router. Could that be possible?

That would surprise me. MSEEs shouldn’t block any traffic. If routing is right, packets should flow. Do you receive the 0.0.0.0/0 onprem correctly?

No, I don't receive 0.0.0.0/0 on my on-prem Cisco router. Would I need to implement a vWAN to enable this? Do I then need to move my firewall and Route Server to vWAN? I really appreciate your answers.

Well, if you want to attract Internet traffic from onprem to Azure, you need to somehow advertise the 0.0.0.0/0 towards onprem. If you are using Route Server, you need to advertise that 0.0.0.0/0 from an NVA to ARS. Here you have an example with AVS, but the architecture with onprem is exactly the same: https://learn.microsoft.com/azure/azure-vmware/concepts-network-design-considerations
